Face Detection - Google ML - Android Studio - Kotlin

Detect Face using Google/Firebase ML Kit

In this tutorial, we will detect the face(s) in an image. We will get the Bitmap from an image in the drawable folder, but we will also show how you can get the Bitmap from a Uri, an ImageView, etc. Using the ML Kit Face Detection API, you can easily identify the key facial features & get the contours of detected faces. Note that the API only detects faces; it doesn't recognize who the people are.

With the ML Kit Face Detection API, you can easily get the information you need to perform tasks like embellishing selfies & portraits or generating avatars from a user's photo. Because the API can detect faces in real time, you can also use it in applications like video chat or games that respond to the player's expressions.
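Speed is what makes real-time use possible, and the MainActivity code in this tutorial gets it by shrinking the bitmap by a fixed SCALING_FACTOR of 10 before detection. A fixed factor can shrink a small photo so far that faces no longer have enough pixels to be found. The sketch below is one way to pick the factor from the image size instead; `chooseScalingFactor` is a hypothetical helper (not an ML Kit API), and the `minShortSide` threshold is an assumption you would tune against ML Kit's input-image guidelines.

```kotlin
// Hypothetical helper, not part of ML Kit: returns the largest integer factor
// (capped at maxFactor) that keeps the image's shorter side >= minShortSide
// after integer division. Falls back to 1 (no shrinking) for small images.
fun chooseScalingFactor(width: Int, height: Int, minShortSide: Int, maxFactor: Int = 10): Int {
    require(width > 0 && height > 0) { "image must have positive dimensions" }
    require(minShortSide > 0 && maxFactor >= 1) { "invalid threshold or cap" }
    val shortSide = minOf(width, height)
    // For integer f: shortSide / f >= minShortSide  <=>  f <= shortSide / minShortSide
    return (shortSide / minShortSide).coerceIn(1, maxFactor)
}
```

For example, a 4000x3000 photo with an assumed `minShortSide` of 480 gives a factor of 6, while a 400x300 image is left at full size. Whatever factor is chosen must also be used when mapping the detected box back to the original bitmap.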

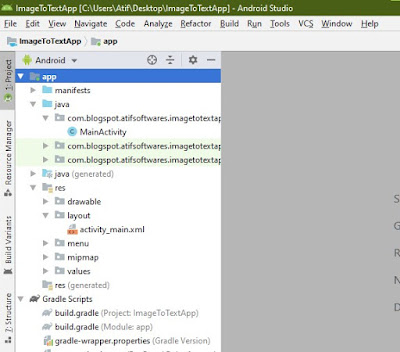

We will use the Android Studio IDE and the Kotlin language.


Coding

build.gradle

```groovy
dependencies {
    implementation 'androidx.core:core-ktx:1.7.0'
    implementation 'androidx.appcompat:appcompat:1.4.2'
    implementation 'com.google.android.material:material:1.6.1'
    implementation 'androidx.constraintlayout:constraintlayout:2.1.4'

    //Face detect library (ML Kit)
    implementation 'com.google.mlkit:face-detection:16.1.5'

    testImplementation 'junit:junit:4.13.2'
    androidTestImplementation 'androidx.test.ext:junit:1.1.3'
    androidTestImplementation 'androidx.test.espresso:espresso-core:3.4.0'
}
```

activity_main.xml

```xml
<?xml version="1.0" encoding="utf-8"?>
<ScrollView xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context=".MainActivity">

    <LinearLayout
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        android:orientation="vertical">

        <!--ImageView: Original Image that will be used to detect & crop face-->
        <ImageView
            android:id="@+id/originalIv"
            android:layout_width="match_parent"
            android:layout_height="wrap_content"
            android:adjustViewBounds="true"
            android:src="@drawable/atif01"/>

        <!--Button: Click to detect & crop face-->
        <Button
            android:id="@+id/detectFaceBtn"
            android:layout_width="match_parent"
            android:layout_height="wrap_content"
            android:text="Detect Face"/>

        <!--ImageView: Show the detected face as cropped image-->
        <ImageView
            android:id="@+id/croppedIv"
            android:layout_width="match_parent"
            android:adjustViewBounds="true"
            android:layout_height="wrap_content"/>

    </LinearLayout>

</ScrollView>
```

MainActivity.kt

```kotlin
package com.technifysoft.myapplication

import android.graphics.Bitmap
import android.graphics.BitmapFactory
import android.graphics.Rect
import android.os.Bundle
import android.util.Log
import android.widget.Button
import android.widget.ImageView
import android.widget.Toast
import androidx.appcompat.app.AppCompatActivity
import androidx.core.graphics.scale
import com.google.mlkit.vision.common.InputImage
import com.google.mlkit.vision.face.Face
import com.google.mlkit.vision.face.FaceDetection
import com.google.mlkit.vision.face.FaceDetector
import com.google.mlkit.vision.face.FaceDetectorOptions

class MainActivity : AppCompatActivity() {

    //UI Views
    private lateinit var originalIv: ImageView
    private lateinit var croppedIv: ImageView
    private lateinit var detectFaceBtn: Button

    //Face detector
    private lateinit var detector: FaceDetector

    //constants
    private companion object {
        //This factor is used to make the detecting image smaller, to make the process faster
        private const val SCALING_FACTOR = 10
        //Tag for logging/debugging
        private const val TAG = "FACE_DETECT_TAG"
    }

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_main)

        //init UI Views
        originalIv = findViewById(R.id.originalIv)
        croppedIv = findViewById(R.id.croppedIv)
        detectFaceBtn = findViewById(R.id.detectFaceBtn)

        //Contour & landmark detection options
        val realTimeFdo = FaceDetectorOptions.Builder()
            .setContourMode(FaceDetectorOptions.CONTOUR_MODE_ALL)
            .setLandmarkMode(FaceDetectorOptions.LANDMARK_MODE_ALL)
            .build()

        //init FaceDetector
        detector = FaceDetection.getClient(realTimeFdo)

        //1) Image from drawable (the same drawable originalIv shows, so the crop matches the photo on screen)
        val bitmap1 = BitmapFactory.decodeResource(resources, R.drawable.atif01)

        //2) Image from ImageView (needs import android.graphics.drawable.BitmapDrawable)
        /*val bitmapDrawable = originalIv.drawable as BitmapDrawable
        val bitmap2 = bitmapDrawable.bitmap*/

        //3) Image from Uri (needs android.net.Uri & android.provider.MediaStore;
        //   getBitmap is deprecated on API 29+, where ImageDecoder replaces it)
        /*val imageUri: Uri? = null
        try {
            val bitmap3 = MediaStore.Images.Media.getBitmap(contentResolver, imageUri)
        } catch (e: Exception) {
            Log.e(TAG, "onCreate: ", e)
        }*/

        //handle click, start detecting face
        detectFaceBtn.setOnClickListener {
            analyzePhoto(bitmap1)
        }
    }

    private fun analyzePhoto(bitmap: Bitmap) {
        Log.d(TAG, "analyzePhoto: ")
        //Make image smaller to do processing faster (never below 1 px per side)
        val smallerBitmap = bitmap.scale(
            (bitmap.width / SCALING_FACTOR).coerceAtLeast(1),
            (bitmap.height / SCALING_FACTOR).coerceAtLeast(1),
            false
        )
        //Input Image for analyzing
        val inputImage = InputImage.fromBitmap(smallerBitmap, 0)
        //start detecting
        detector.process(inputImage)
            .addOnSuccessListener { faces ->
                //Task completed; it may still have found zero faces
                Log.d(TAG, "analyzePhoto: number of faces ${faces.size}")
                if (faces.isEmpty()) {
                    Toast.makeText(this, "No face detected", Toast.LENGTH_SHORT).show()
                    return@addOnSuccessListener
                }
                Toast.makeText(this, "Face Detected...", Toast.LENGTH_SHORT).show()
                cropDetectedFace(bitmap, faces)
            }
            .addOnFailureListener { e ->
                Log.e(TAG, "analyzePhoto: ", e)
                Toast.makeText(this, "Failed due to ${e.message}", Toast.LENGTH_SHORT).show()
            }
    }

    private fun cropDetectedFace(bitmap: Bitmap, faces: List<Face>) {
        Log.d(TAG, "cropDetectedFace: ")
        //there might be multiple faces; loop over faces to crop all of them, here only the first is used
        val box = faces[0].boundingBox
        //the box is in smallerBitmap coordinates, so scale every edge back up to the original bitmap
        val rect = Rect(
            box.left * SCALING_FACTOR,
            box.top * SCALING_FACTOR,
            box.right * SCALING_FACTOR,
            box.bottom * SCALING_FACTOR
        )
        //optional: rect.inset(-padding, -padding) here gives a looser crop (hair, chin)
        //clip to the bitmap bounds, since the box can extend past the image edges
        if (!rect.intersect(0, 0, bitmap.width, bitmap.height)) return
        //cropped bitmap
        val croppedBitmap = Bitmap.createBitmap(bitmap, rect.left, rect.top, rect.width(), rect.height())
        //set cropped bitmap to croppedIv
        croppedIv.setImageBitmap(croppedBitmap)
    }

    override fun onDestroy() {
        super.onDestroy()
        //release the detector's resources
        detector.close()
    }
}
```
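The MainActivity code only crops `faces[0]` and maps its box back with the nominal SCALING_FACTOR. The sketch below isolates that coordinate math so it can be checked on a plain JVM, and extends it to every detected face. `Box` is a hypothetical stand-in for `android.graphics.Rect`, and `toOriginalCrop` / `cropsForAllFaces` are illustrative helpers, not ML Kit APIs. It uses the actual size ratio between the original and the downscaled bitmap, because integer division may have rounded the smaller size.

```kotlin
// Box is a hypothetical stand-in for android.graphics.Rect so this runs without Android.
data class Box(val left: Int, val top: Int, val right: Int, val bottom: Int) {
    val width: Int get() = right - left
    val height: Int get() = bottom - top
}

// Maps a box found on the downscaled image back to original-image pixels,
// optionally grows it by `padding` pixels on every side, then clips it to the
// image bounds. Returns null when no part of the box lies inside the image.
fun toOriginalCrop(
    box: Box,
    scaleX: Float,
    scaleY: Float,
    imageWidth: Int,
    imageHeight: Int,
    padding: Int = 0
): Box? {
    val left = ((box.left * scaleX).toInt() - padding).coerceAtLeast(0)
    val top = ((box.top * scaleY).toInt() - padding).coerceAtLeast(0)
    val right = ((box.right * scaleX).toInt() + padding).coerceAtMost(imageWidth)
    val bottom = ((box.bottom * scaleY).toInt() + padding).coerceAtMost(imageHeight)
    return if (right > left && bottom > top) Box(left, top, right, bottom) else null
}

// Converts every detected box (not just the first), dropping boxes outside the image.
fun cropsForAllFaces(
    boxes: List<Box>,
    smallWidth: Int,
    smallHeight: Int,
    imageWidth: Int,
    imageHeight: Int,
    padding: Int = 0
): List<Box> {
    // Real ratio between the two bitmaps on each axis, instead of the nominal factor
    val scaleX = imageWidth.toFloat() / smallWidth
    val scaleY = imageHeight.toFloat() / smallHeight
    return boxes.mapNotNull { toOriginalCrop(it, scaleX, scaleY, imageWidth, imageHeight, padding) }
}
```

In the app, one would build each `Box` from `face.boundingBox.left/top/right/bottom`, pass `smallerBitmap.width/height` and `bitmap.width/height`, and then call `Bitmap.createBitmap(bitmap, crop.left, crop.top, crop.width, crop.height)` for each returned crop. Because the clipped box always has a positive width and height, `createBitmap` is never asked for an empty or out-of-bounds region.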
